Certainly by now you know I’m a fan of co-location. Considering co-location is a must for any organization with a data center need under 20,000 square feet.

Having determined requirements and engaged co-location providers with an RFP, it’s now time to narrow the focus and make a decision.

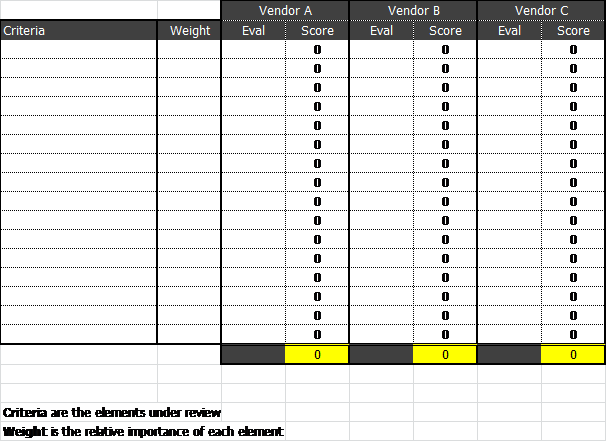

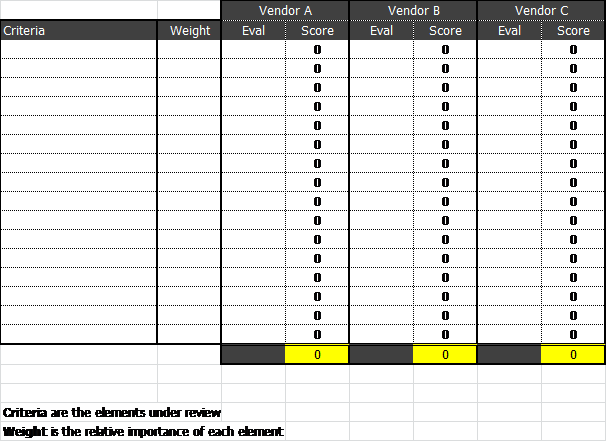

Using an evaluation matrix is a useful way to thin the herd. In the first column, list your evaluation criteria (location, price, total capability, etc.). Each criterion is then assigned a weight, with 1 being the lowest and 10 being the highest. Each vendor is then evaluated on a 1-10 scale for each criterion, with the resulting score = weight x eval. Those scores are then added for a final total, allowing comparison.
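To make the arithmetic concrete, here is a minimal sketch of the weighted scoring in Python. The vendor names, weights, and ratings are made up for illustration; substitute your own criteria and numbers.

```python
# Hypothetical criteria with weights from 1 (lowest) to 10 (highest).
weights = {"location": 8, "price": 6, "total capability": 9}

# Hypothetical vendor ratings on a 1-10 scale for each criterion.
ratings = {
    "Vendor A": {"location": 7, "price": 5, "total capability": 9},
    "Vendor B": {"location": 9, "price": 8, "total capability": 4},
}


def weighted_total(weights, vendor_ratings):
    """Add up weight x rating across every criterion for one vendor."""
    return sum(weights[c] * vendor_ratings[c] for c in weights)


totals = {vendor: weighted_total(weights, r) for vendor, r in ratings.items()}

# Print vendors from highest total to lowest for comparison.
for vendor, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{vendor}: {total}")
```

With these made-up numbers Vendor A edges out Vendor B despite weaker location and price ratings, because "total capability" carries the heaviest weight.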

I am a fan of the evaluation matrix, but having performed this exercise dozens of times, I’ve found some issues with this approach worth discussing.

If a vendor simply doesn’t meet the requirements, they get a “low” score, yet not meeting requirements should be sufficient to disqualify. If ALL vendors miss on requirements, the chances are good either the requirements or the RFP are “too tight.” It may also indicate a need for an in-house data center, although in practice we rarely see this.
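One way to handle this is to treat hard requirements as a pass/fail gate before any scoring happens. The sketch below assumes a simple yes/no checklist per vendor; the requirement names and vendors are hypothetical.

```python
def split_by_requirements(requirements, vendor_checks):
    """Separate vendors that meet every hard requirement from those that miss any."""
    qualified = []
    disqualified = {}
    for vendor, checks in vendor_checks.items():
        # A requirement missing from a vendor's checklist counts as not met.
        missed = [r for r in requirements if not checks.get(r, False)]
        if missed:
            disqualified[vendor] = missed
        else:
            qualified.append(vendor)
    return qualified, disqualified


# Hypothetical hard requirements and vendor answers.
requirements = ["carrier diversity", "generator backup"]
vendor_checks = {
    "Vendor A": {"carrier diversity": True, "generator backup": True},
    "Vendor B": {"carrier diversity": True, "generator backup": False},
}

qualified, disqualified = split_by_requirements(requirements, vendor_checks)

if not qualified:
    # Everyone missed: the requirements or the RFP may be too tight.
    print("No vendor meets every requirement; revisit the requirements or the RFP.")
```

Only the vendors in `qualified` would go on to the weighted matrix; the `disqualified` mapping records which requirements each of the others missed.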

What is more likely is that the vendors will fall into groups. We use these groups for our analysis and next steps. The “top” group will be the ones to continue conversations with, and the bottom group is most likely worth passing on.

Here’s the dirty little secret. The evaluation matrix gives an objective appearance to a subjective process. You still need to go out and visit the (short) list of companies before making a final decision. We use the evaluation matrix as a tool to narrow the list, not the ultimate decision tool.

Once you’ve met with vendors at their location and kicked the tires, a final evaluation matrix can be assembled.

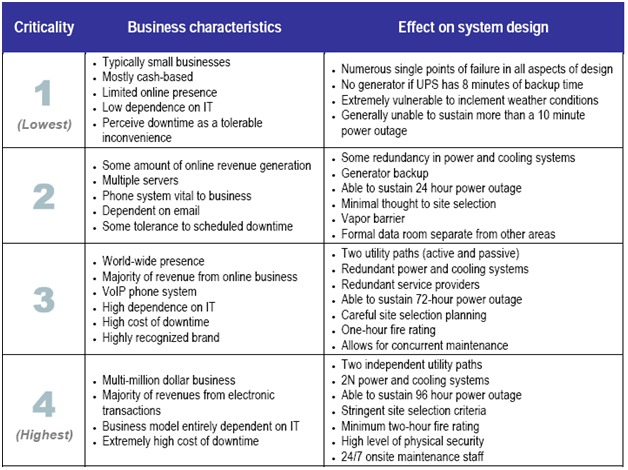

When you visit the co-location providers, take time in your evaluation. The ANSI/TIA-942 standard can be used as a guideline, or professional services can assist.

Walk around the building and get a general sense of the neighborhood and plant housekeeping. You should not be able to get close to the operating equipment at street level.

When you tour the data center, is security reasonable for your company?

Spend time understanding the Mechanical, Electrical and Plumbing (MEP) parts of the data center…this is the “guts” and arguably the most important part of the facility. Is the housekeeping of the MEP pristine? I’m a big believer in taking my old car for service in the ugliest garage in town…and the MEP area shouldn’t look like that! If you see buckets under leaking valves, you can quickly realize maintenance isn’t what it should be.

Ask the hard questions. Ask to see evidence of equipment maintenance (we visited a co-location provider touting regular infrared electrical panel scanning, and the last scan had been performed three years earlier). Ask for a history of outages (you may be surprised by what you discover).

Take time to understand the communications carriers already in the building. Do not assume your carriers are already there. Co-location providers have “meet me” areas, where carriers and customers are interconnected. Cable management in this space is imperative.

Ask to speak to references…and do so. Find out if the provider is easy to work with, or a fan of up-charging for everything. Let’s face it, your equipment will break, and you may need to get a vendor to ship a part; you shouldn’t have to pay for “processing a delivery!”

Spend time on Google, and find out if there are any articles about outages/issues in the facility.

I’m a big believer that, in the end, people buy from people…so don’t hesitate to think about whether you can simply work with the provider and their people. People will change from time to time, and the “move in” period is a very important time.

Look at the contracts. All will have protections for the provider for outages; it’s how they deal with them you need to consider.

Only when you have completed your due diligence can you make your final decision.

Monday, May 7, 2012 at 8:00AM
