Everyone Wants to be the Cool Kid

Monday, May 21, 2012 at 8:00AM

In this guest post, a good friend toiling in a very private company provides a thought-provoking commentary on current data center design and in-vogue design approaches. My friend has asked for anonymity, although I vouch for their professionalism.

When I was a kid, we had very little money. Designer jeans, a shiny new bike, or the latest fad toy: you name it. I did not have it. So, I know all too well that feeling of wanting desperately to be cool.

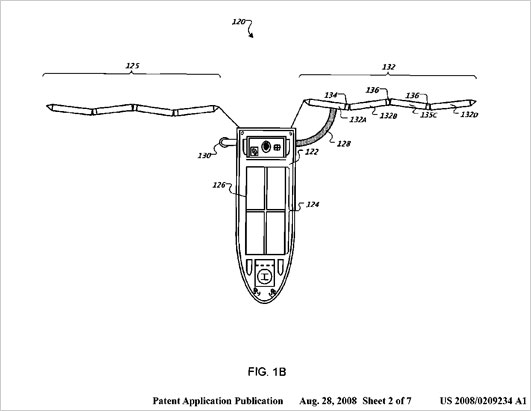

I think that is what is going on in the data center space these days. The cool kids are having all the fun. Yahoo’s chicken coop is cool, as is Google’s barge concept to harness energy from ocean waves. (Data centers are usually in particularly boring places, so what data center operator wouldn’t want the opportunity to hang out at sea? Sign me up.)

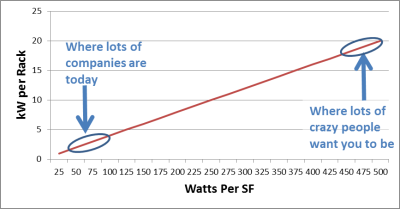

You know what else the cool kids are doing? They are jamming as much compute into a rack as the laws of physics will allow. So, we should too, right? Wrong.

Before you get all excited and tell me all about that super-cool-rack of super-cool-cloud-ready-kit that you just installed, let me clarify. I am absolutely not saying any given rack shouldn’t be filled to the brim. In fact, you should put a couple of these together and make sure to walk your prospective clients on by so that they think you are part of the “in crowd”. What I am saying, however, is that most of us are not Amazon, Google, or Yahoo.

Most of us don’t have rack-after-rack, row-after-row, and hall-after-hall of exactly the same stuff. And our stuff probably isn’t all running the same app, so we actually do care when some of it goes down. What I am questioning is whether most companies should fill a large data center wall to wall with 20+ kW footprints.

Let’s play pretend, shall we?

Let’s pretend we are a big-enough company that building our own data center (rather than using a co-lo) makes sense. This is a pretty big investment, and as the project manager for such an undertaking, I have a lot of things to think about. Data centers are a long-term investment, so the decisions we make now are going to be with us for a very long time. For argument’s sake, let’s say 20 years. This means that at the 15-year mark, we are still going to try to eke out one more technology lifecycle.

15 years is a really long time in technology.

A bit less than 15 years ago, I was walking in the woods with my dog, sporting a utility belt even Batman would envy. Clipped on my belt were my BlackBerry, my cell phone, and my two-way pager. And, in hopes a great shot was to be had, I was also carrying my Nikon FM2 35mm camera. Today, I carry none of these devices, but my iPhone is never more than three feet away from my body and usually in a pocket.

As I walked through the woods that fine afternoon, all three items on my belt started to ‘go off’ at the same time, just as I was trying to take a picture of my dog playing in a field. It was not lost on me that this was quite ridiculous and that “someday” greater minds than mine would certainly put an end to this madness.

I am deeply grateful for all of the people up-to-and-including Steve Jobs who brought us the iPhone. Now my operations associates don’t need to seek chiropractic help each time they are on-call.

Let’s get back to the problem of data centers.

There are three fundamental decisions we need to make:

- how resilient will our data center need to be,

- how many MW does it need to be, and

- how dense we are going to make the whitespace (the space where all of the servers and the other technology gets installed).

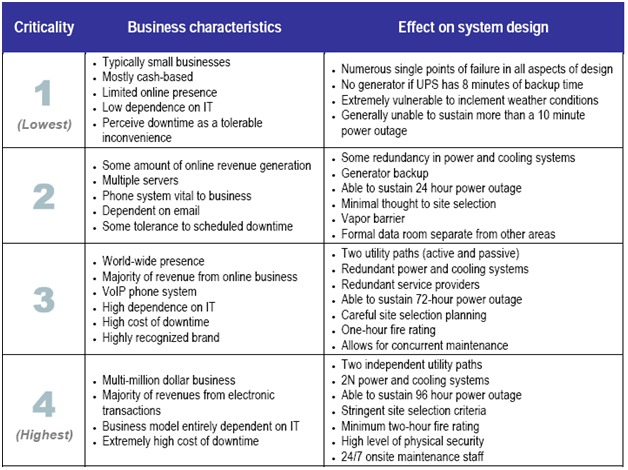

We are pretending to be an enterprise-class operation, so for our discussion we are going to assume we need a “concurrently maintainable” data center. This might put us somewhere between Tier 3 and Tier 4 based on the ANSI/TIA-942 or Uptime Institute’s established checklists.

We will further assume we are going to need at least 1 MW of power to start off and that we will grow into somewhere near 4 MW over time.

Only one more question to go: power density.

Now, if I were a consultant, maybe building something ultra-dense would be part of my objective because I want to be able to talk about it in my portfolio. However, we will work under the premise that I really do have the best interests of the firm at heart.

There is a metric in the data center world that is both infinitely valuable and amazingly useless at the same time. It is called PUE, and it stands for Power Usage Effectiveness.

Quite simply, it is an indication of how much power is being “wasted” to operate the data center beyond what the IT already consumes.
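For readers who want the arithmetic: PUE is usually computed as total facility power divided by the power delivered to the IT equipment, so the “waste” is everything in the numerator that is not IT load. The minimal Python sketch below assumes you already have both readings in kW; the function names and the 1,700 kW / 1,000 kW figures are hypothetical, chosen only for illustration.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(total_facility_kw: float, it_kw: float) -> float:
    """Power spent on cooling, power conversion, lighting, etc. (the 'waste')."""
    return total_facility_kw - it_kw


# Hypothetical readings: 1,000 kW of IT load in a facility drawing 1,700 kW in total.
print(pue(1700, 1000))          # 1.7
print(overhead_kw(1700, 1000))  # 700
```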

In a perfect world, the PUE would be equal to 1. There would be no waste. Actually, some would suggest the goal is to have a PUE of less than one. Huh?

To accomplish this, you would have to capture some of the IT energy and use it to avoid spending energy somewhere else. For example, you would take the hot air the servers produce and use it to heat nearby homes and businesses thereby saving energy on heating fuel.

On the flip side, PUE can be ‘wicked high’. Imagine cooling a mansion to 65 degrees but never leaving the room above the garage. This is what happens when a data center is built too big too fast and there isn’t enough technology to fill the space. In fact, there are stories of companies that had to install heaters in the data center just so that the air conditioning equipment wouldn’t take in “too cold” air.
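One way to see why an underfilled hall scores so badly is to assume that part of the facility overhead (some cooling, lighting, UPS losses) runs no matter how much IT is installed. The sketch below models overhead as a fixed kW amount plus a share proportional to IT load; that split, the function name, and all the numbers are illustrative assumptions, not measurements.

```python
def modeled_pue(it_kw: float, fixed_overhead_kw: float, proportional_overhead: float) -> float:
    """Hypothetical model: overhead = fixed part + part that scales with IT load."""
    total_kw = it_kw + fixed_overhead_kw + proportional_overhead * it_kw
    return total_kw / it_kw


# Hypothetical hall: 300 kW of overhead that runs regardless of load,
# plus 0.4 kW of overhead for every kW of IT load.
for it_kw in (1000, 500, 200):
    print(it_kw, round(modeled_pue(it_kw, 300, 0.4), 2))
# 1000 1.7
# 500 2.0
# 200 2.9
```

Under these made-up numbers, the same building goes from 1.7 to 2.9 simply by running at a fifth of its design load.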

Google and Yahoo tout PUEs in the 1.1-1.3 range. This is the current ‘gold standard’. Most reports put the average corporate PUE above 2. But this is where we start making fruit salad – comparing apples, oranges, and star fruit.

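How companies calculate their PUE is always a little unclear, and it is very unclear whether that average is a weighted average. If it is not, the “above 2” average is quite suspect, since the “average” data center is little more than an electrified closet (Ed. Note: or refrigerator.) A simple average lets all those small, inefficient rooms outvote the large facilities that actually consume most of the power.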
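The weighting question matters because a simple average gives every site one vote regardless of size. The sketch below compares a simple average with a load-weighted (aggregate) PUE for an invented portfolio of nine 10 kW closets at 2.5 and one 1 MW facility at 1.5; the figures and function names are hypothetical and only show how far apart the two methods can land.

```python
# Hypothetical sites: (IT load in kW, PUE). Nine small "electrified closets"
# and one large, well-run facility. Numbers are invented for illustration.
sites = [(10, 2.5)] * 9 + [(1000, 1.5)]


def simple_average_pue(sites):
    """Every site counts equally, regardless of size."""
    return sum(p for _, p in sites) / len(sites)


def load_weighted_pue(sites):
    """Aggregate PUE: total facility power / total IT power."""
    total_it = sum(it for it, _ in sites)
    total_facility = sum(it * p for it, p in sites)
    return total_facility / total_it


print(round(simple_average_pue(sites), 2))  # 2.4
print(round(load_weighted_pue(sites), 2))   # 1.58
```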

Let’s ignore PUE for now and assume two things about our company:

- our data centers are pretty full (remember PUE gets really bad if there is a lot of wasted capacity) and

- we have been doing an OK but not stellar job of managing our existing data centers.

If we were even measuring it (I bet most companies are not), this might place our current PUE in the range of 1.6-1.8. That is roughly 0.5 above the 1.1-1.3 ‘gold standard’.

By now, you are probably asking yourself what PUE and our question of density have to do with each other. As you meet with various design firms, they will try to sell you on their design based on the assumed resulting PUE. And, proponents of high-density data center space will tell you this will improve your PUE.

My argument is simply that there are a lot of other ways to improve our PUE without turning our data center into a sardine can. Not overbuilding capacity, hot/cold aisle containment, simply controlling which floor tiles are open, or running the equipment just a little warmer are a few that immediately come to mind. Remember, we think we have a 0.5 PUE opportunity.

All of these things can be done at low risk, and I contend that banking on high-density demand in a design that needs to last 20 years is folly. Yes, there is some stuff on the market that runs hot. But since we already decided we are a complex enterprise that doesn’t only have low-resiliency widgets, we will also have a bunch of stuff that runs much cooler.

For our discussion, let’s assume our existing data centers average 4 kW per footprint. This puts us at about 100 watts per square foot: at the high end of most data center space but not bleeding edge by any means. I have seen some footprints pushing into double-digit kW, but these are offset by lower-density areas of the data center (patch fields, network gear, open floor space, etc.).
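The two averages in this paragraph imply how much gross floor each footprint occupies once aisles and the lower-density areas are counted. A quick sketch of that arithmetic, using only the 4 kW and 100 W/sf figures from the text; the roughly 40 sf per footprint it produces is a derived figure, not one the author states, and the variable names are hypothetical.

```python
KW_PER_FOOTPRINT = 4.0   # average from the text
WATTS_PER_SQFT = 100.0   # whitespace density from the text

# Gross whitespace each footprint "owns", including its share of aisles,
# patch fields, network gear and open floor.
sqft_per_footprint = KW_PER_FOOTPRINT * 1000 / WATTS_PER_SQFT

it_load_kw = 1000.0      # 1 MW of IT load
footprints = it_load_kw / KW_PER_FOOTPRINT
whitespace_sqft = it_load_kw * 1000 / WATTS_PER_SQFT

print(sqft_per_footprint)  # 40.0
print(footprints)          # 250.0
print(whitespace_sqft)     # 10000.0
```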

Another component that isn’t breaking the power bank is storage. Storage arrays are somewhere south of 10 kW per rack and seem pretty steady. A smart person once told me we get about twice as much disk space in a rack every two years for about the same amount of power. And that assumes we keep buying spinning disk for the next 20 years. Some of the latest PC/laptop systems are loaded with flash drives, and these have even started making their way into some data centers. In a recent Computerworld article, a discussion of a particular flash storage array put the entire rack at 1.4 kW. Granted, these are pricey right now, but such is the joy of Moore’s Law. Again, 20 years is a long time.
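If the “twice the disk space every two years at the same power” rule of thumb held for the whole 20-year life (a big assumption), capacity per rack would compound quickly. A small sketch of that compounding; the two-year doubling period is the only input and comes from the rule of thumb quoted above, and the function name is hypothetical.

```python
def capacity_multiplier(years: float, doubling_period_years: float = 2.0) -> float:
    """Disk capacity per rack relative to today, if it doubles every period
    at roughly constant rack power (rule-of-thumb assumption)."""
    return 2 ** (years / doubling_period_years)


for years in (2, 10, 20):
    print(years, capacity_multiplier(years))
# 2 2.0
# 10 32.0
# 20 1024.0
```

At constant rack power, a 1,024x capacity gain means the power per unit of storage falls by the same factor.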

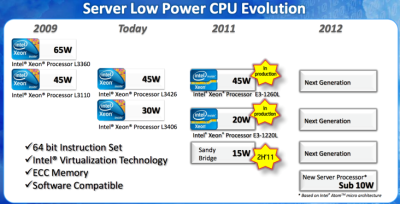

We should also consider all of the talk about ‘green IT’ in the compute space. Vendors “get it”. There is much discussion today about how much compute power per watt a system provides. This is great. If we measure it, we can understand it. If we understand it, we will manage it.

Intel has talked about chips that will deliver a 20X power savings. Remember that super cool (hot) rack with 22 kW of stuff jammed in? What if it only took 1.1 kW? And what if that happens five years into our 20-year data center life?

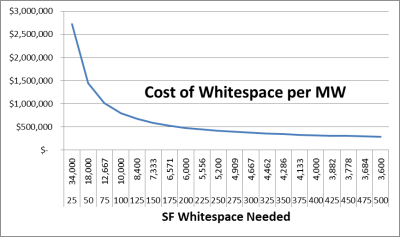

The sales pitch may also try to sell you on cheaper construction due to the smaller whitespace footprint but this is just silly unless you are in downtown Manhattan. I read something once that put the price of ‘white space’ at $80/sf. This would mean that building a MW at 100 watts/sf would cost $800K versus $288K for the sardine can.

This is a bit more than $0.5MM per MW. To put this in perspective, I would put the price tag at $35MM/MW including the building, the MEP, racks, cabling, core network, etc. So we are talking about 1.4% of the cost. And, since we are going to keep this investment for 20 years, we are only talking about ~$25K per year in “excess” depreciated build cost per MW.
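The whitespace comparison can be reproduced as follows. The sketch takes the $80/sf price, the $35MM/MW all-in estimate, the 20-year life and the $288K “sardine can” figure straight from the text (the text does not say which density produces $288K, so it is used as given); the helper name is hypothetical.

```python
COST_PER_SQFT = 80.0            # quoted price of whitespace, $/sf
BUILD_COST_PER_MW = 35_000_000  # all-in estimate from the text, $/MW
LIFETIME_YEARS = 20


def whitespace_cost(it_kw: float, watts_per_sqft: float) -> float:
    """Cost of the whitespace needed to house it_kw at a given density."""
    sqft = it_kw * 1000 / watts_per_sqft
    return sqft * COST_PER_SQFT


moderate = whitespace_cost(1000, 100)  # 10,000 sf -> $800,000
sardine_can = 288_000                  # figure quoted in the text
extra = moderate - sardine_can

print(extra)                                      # 512000.0
print(round(extra / BUILD_COST_PER_MW * 100, 2))  # 1.46 (% of total build cost)
print(extra / LIFETIME_YEARS)                     # 25600.0 per year
```

With the unrounded $512K, the share comes out at about 1.5% of the build cost, in line with the rounded figure in the text.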


And let’s pretend we buy into the assumption that this is going to cost us some opportunity on our PUE. This is important because paying the electric company is something that happens every year. If we put our data center someplace reasonable, a 0.1 PUE difference might be worth around another $40K per MW per year. This assumes power at $0.06 per kWh, which honestly we should be able to beat.

(1,000 kW DC) × (80% lifetime average utilization) × (0.1 PUE impact) × (365 × 24 hours) × ($0.06 per kWh)

= $42,048

So we are out of pocket roughly $67K per year per MW. I understand this is real money. Still, even if that PUE difference is real, it seems like a small price to pay for future-proofing our 20-year investment.
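Adding the two per-MW figures above with unrounded numbers gives the annual “penalty” for the lower-density design. The sketch reuses the inputs from the formula (1,000 kW, 80% utilization, 0.1 PUE, $0.06/kWh) and the $512K extra whitespace cost ($800K minus $288K) spread over 20 years; straight-line depreciation is an assumption, and the function name is hypothetical.

```python
def annual_pue_energy_cost(it_kw, utilization, pue_delta, price_per_kwh):
    """Extra utility bill per year caused by a PUE difference."""
    hours_per_year = 365 * 24
    return it_kw * utilization * pue_delta * hours_per_year * price_per_kwh


energy = annual_pue_energy_cost(1000, 0.80, 0.1, 0.06)
# Extra whitespace build cost ($800K - $288K) spread evenly over 20 years.
depreciation = 512_000 / 20

print(round(energy))                 # 42048
print(round(depreciation))           # 25600
print(round(energy + depreciation))  # 67648
```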

The space shuttle’s external fuel tank was supposedly left unpainted to save weight rather than money, but maybe we could still take a lesson from it and cut back somewhere else: somewhere that doesn’t lead us to treat our technology like salty fish.

Just saying.

From Google’s Data Barge Patent
